Artificial intelligence is evolving fast. One of its most controversial areas is affective computing, in which machines are trained to detect and respond to human emotions. By analyzing facial expressions, tone of voice, and even heart rate, these systems are claimed to infer how people feel.

But when these systems target teenagers, the risks go far beyond technology. They touch privacy, mental health, and ethics.

What Is Affective Computing?

Affective computing uses AI to analyze emotions. Examples include:

- Apps that detect stress or sadness in a teen’s voice.

- Video games that change difficulty based on frustration.

- Social platforms that recommend content based on mood.

This may sound innovative, but it raises critical risks for young people.

Emotional Surveillance and Privacy

Teenagers already share a huge amount of personal data. Adding emotional data means their inner feelings become a product.

Key questions:

- Who controls this emotional information?

- Will companies sell it to advertisers?

- Could schools or employers use it later?

For teens, the idea of being constantly “read” can create stress, anxiety, and self-censorship.

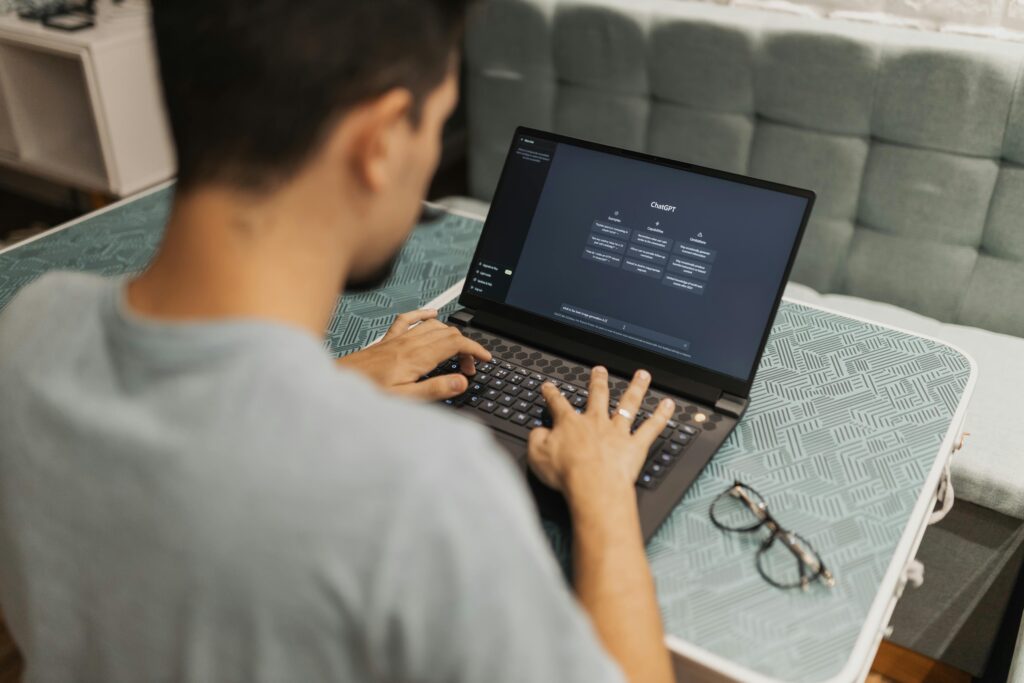

Manipulation Through AI Personalization

Social media platforms are already designed to maximize engagement, and affective computing could intensify that. Imagine a platform detecting loneliness or insecurity in real time and then pushing content that feeds those emotions.

Risks include:

- Reinforcing body image problems.

- Amplifying extremist or harmful content.

- Keeping teens locked in endless emotional loops.

Instead of helping teens, this could exploit their vulnerabilities.

Mental Health Concerns

Being a teenager is already complex. If every emotion is tracked by AI, it could lead to:

- Loss of authenticity: Teens acting for the algorithm, not themselves.

- Overreliance on tech: Depending on apps to “tell” them how they feel.

- Added pressure: Knowing that their emotions are being monitored can intensify those emotions.

The Bias Problem

Affective AI often misreads emotions, and its error rates can differ across cultures, skin tones, and genders. For teens, these biases can reinforce harmful stereotypes and lead to unfair treatment.

A Responsible Path Forward

Affective computing can help in some contexts, such as mental health support or adaptive learning. But when used with teenagers, the priority must be protection over profit.

What is needed:

- Transparency about how emotional data is collected and stored.

- Clear rules against commercial use of teen emotions.

- Independent audits to reduce bias and misinterpretation.
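
To make the audit idea more concrete, here is a minimal sketch of one check an auditor might run: comparing how often an emotion classifier is wrong for different groups. Everything in it is an assumption for illustration. The function names `error_rates_by_group` and `max_disparity`, the group labels, and the sample records are hypothetical and invented, not taken from any real system or dataset. A real audit would need far more data and more careful metrics.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: share of records where the predicted emotion is wrong}.

    Each record is a (group, actual_emotion, predicted_emotion) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, actual, predicted in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_disparity(rates):
    """Return the largest gap in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical, made-up records used only to show the calculation.
sample = [
    ("group_a", "calm", "calm"),
    ("group_a", "sad", "sad"),
    ("group_a", "calm", "angry"),
    ("group_a", "happy", "happy"),
    ("group_b", "calm", "angry"),
    ("group_b", "sad", "angry"),
    ("group_b", "happy", "happy"),
    ("group_b", "calm", "calm"),
]

rates = error_rates_by_group(sample)
gap = max_disparity(rates)
print(rates)
print(gap)
```

A large gap between groups would be a signal to investigate further, not proof of bias on its own, since small samples and unreliable "actual" labels can both distort the numbers.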

Final Thought

Teenagers need freedom to explore their emotions, not algorithms watching and scoring every move.

AI may be able to read feelings, but the real question is whether it should, especially when it comes to young people.